Prerequisites

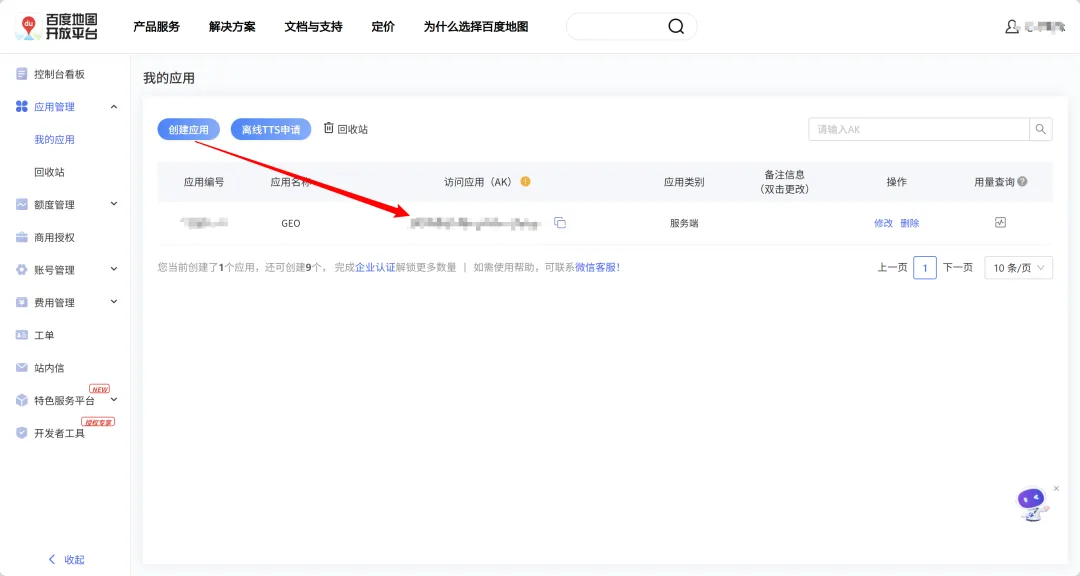

Get a Baidu API key

Baidu Developer Platform


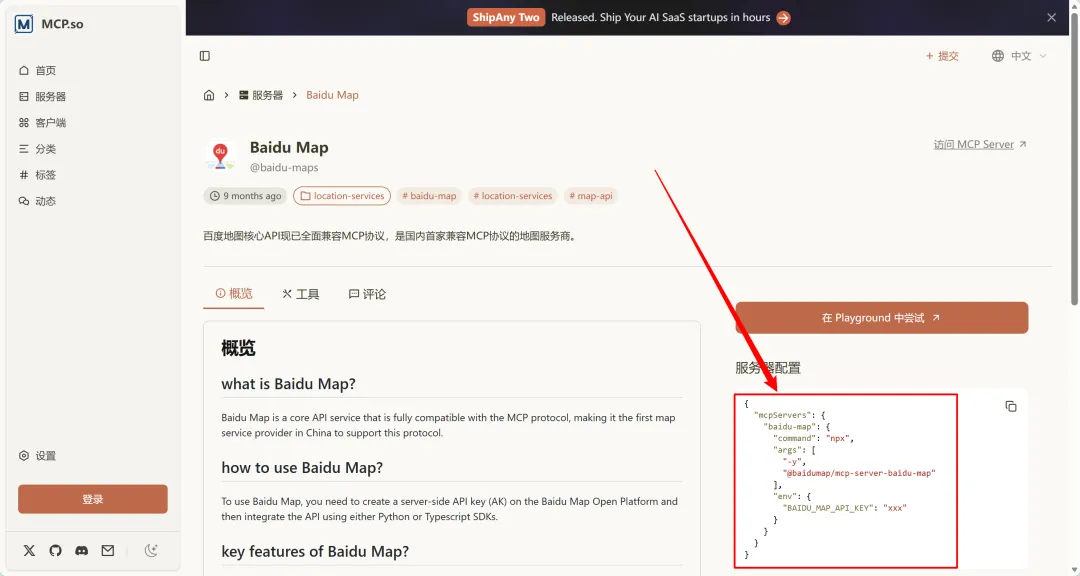
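The key is later passed to the MCP server through the `BAIDU_MAP_API_KEY` environment variable. Here is a minimal sketch that reads it from the environment instead of hard-coding it. It assumes you export the key under that same name in your shell. `load_baidu_map_key` is a hypothetical helper, not part of any library:

```python
import os

# Assumption: the key is exported under the same name the MCP server config uses in "env".
ENV_VAR = "BAIDU_MAP_API_KEY"


def load_baidu_map_key() -> str:
    """Read the Baidu Map API key from the environment, failing fast if it is missing."""
    key = os.environ.get(ENV_VAR, "").strip()
    if not key:
        raise RuntimeError(
            f"{ENV_VAR} is not set; create a key on the Baidu developer platform "
            "and export it before starting the service."
        )
    return key
```

Failing at startup gives a clear error message. If the key were missing, the MCP server would otherwise fail later, in the middle of a tool call.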

Get the Baidu MCP configuration for the LLM

Baidu MCP service
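The MCP service is described to `MultiServerMCPClient` as a plain dict. The sketch below shows one way to build it. The helper name `build_baidu_map_mcp_config` is hypothetical. It produces the same `baidu-map` entry used in the service code, but takes the key as a parameter instead of hard-coding it. It assumes the server is started locally with `npx`, which is why the transport is `stdio`:

```python
from typing import Any


def build_baidu_map_mcp_config(api_key: str) -> dict[str, dict[str, Any]]:
    """Return the connection dict for the Baidu Map MCP server.

    The server runs as a local subprocess launched by npx and communicates
    over stdin/stdout, so the transport is "stdio".
    """
    return {
        "baidu-map": {
            "command": "npx",
            "args": ["-y", "@baidumap/mcp-server-baidu-map"],
            "env": {"BAIDU_MAP_API_KEY": api_key},
            "transport": "stdio",
        }
    }
```

For example: `MultiServerMCPClient(build_baidu_map_mcp_config(os.environ["BAIDU_MAP_API_KEY"]))`.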


```python
from fastapi import FastAPI
from langchain_core.messages import HumanMessage, ToolMessage
from langchain_mcp_adapters.client import MultiServerMCPClient
from langchain_openai import ChatOpenAI
from pydantic import BaseModel
import uvicorn

app = FastAPI()


# Request body
class ChatRequest(BaseModel):
    content: str


# LLM configuration (any OpenAI-compatible endpoint)
llm = ChatOpenAI(
    base_url="your LLM endpoint URL",
    api_key="xxx",
    model="Huihui-Qwen3-Coder-30B-A3B-Instruct-abliterated-gptq-w4a16",
    temperature=0.7,
)

# Baidu Map MCP configuration
mcp_config = {
    "baidu-map": {
        "command": "npx",
        "args": ["-y", "@baidumap/mcp-server-baidu-map"],
        "env": {"BAIDU_MAP_API_KEY": "your Baidu Developer Platform API key"},
        # Pay attention here: a server launched from command + args must use "stdio"
        "transport": "stdio",
    }
}


@app.post("/chat")
async def chat(request: ChatRequest):
    """Chat endpoint that lets the LLM call Baidu Map tools."""
    # 1. Create the MCP client and fetch its tools
    client = MultiServerMCPClient(mcp_config)
    tools = await client.get_tools()
    tools_by_name = {tool.name: tool for tool in tools}

    # 2. Bind the tools to the LLM
    llm_with_tools = llm.bind_tools(tools)

    # 3. Call the LLM
    messages = [HumanMessage(content=request.content)]
    response = await llm_with_tools.ainvoke(messages)

    # 4. If the LLM wants to call tools, run every requested tool.
    #    Each call must be answered by a ToolMessage with the matching
    #    tool_call_id; OpenAI-compatible APIs reject the history otherwise.
    if response.tool_calls:
        messages.append(response)
        for tool_call in response.tool_calls:
            tool = tools_by_name.get(tool_call["name"])
            if tool is None:
                result = f"Unknown tool: {tool_call['name']}"
            else:
                result = await tool.ainvoke(tool_call["args"])
            messages.append(
                ToolMessage(content=str(result), tool_call_id=tool_call["id"])
            )

        # Send the tool results back to the LLM so it can summarize an answer
        final_response = await llm.ainvoke(messages)
        return {"response": final_response.content}

    # 5. No tool call needed: return the answer directly
    return {"response": response.content}


if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)
```
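Once the service is running, any HTTP client can call it. Below is a small standard-library sketch of such a client. It assumes the server is reachable at `127.0.0.1:8000`, as started by `uvicorn.run`. The helper names `build_chat_request` and `ask` are illustrative only:

```python
import json
import urllib.request

# Assumption: the FastAPI service runs locally on port 8000.
CHAT_URL = "http://127.0.0.1:8000/chat"


def build_chat_request(content: str, url: str = CHAT_URL) -> urllib.request.Request:
    """Build a POST request whose JSON body matches the ChatRequest model."""
    body = json.dumps({"content": content}, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(content: str, timeout: float = 120.0) -> str:
    """Send a question to /chat and return the "response" field of the reply."""
    with urllib.request.urlopen(build_chat_request(content), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

To try it, start the server and make sure the LLM endpoint and Baidu key are valid. Then `print(ask("Which restaurants are near Tiananmen Square in Beijing?"))` should print the model's summarized answer. The timeout is generous because each request may spawn the `npx` MCP server and make two LLM calls.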