Linux KVM on ARM64: A Multi-VCPU Demo

- 2026-02-05 22:49:44

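When an exception occurs while the Guest is running (for example, an access to a hardware address that is not backed by anything), the hardware takes it through the exception vector table. KVM installs its own vector table at EL2. When the Guest traps out, the CPU jumps to this KVM code, which restores the context of the host Linux thread; KVM_RUN then returns to the user-space part of that thread, and user space handles the trap.

With multiple VCPUs, the Guest's original Hello World example has to change. The assembly part gives each of the two VCPUs its own stack, selected by CPU ID: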

    .global _start
    _start:
        /* Read MPIDR_EL1 to get CPU ID */
        mrs x0, mpidr_el1       /* Read Multiprocessor Affinity Register */
        and x0, x0, #0xFF       /* Extract Aff0 (CPU ID) */

        /* Calculate stack pointer for this CPU */
        /* CPU 0: 0x04020000, CPU 1: 0x04030000, etc. */
        ldr x30, =stack_top     /* Base stack address */
        mov x1, #0x10000        /* 64KB stack per CPU */
        madd x30, x0, x1, x30   /* stack_top + (cpu_id * 0x10000) */
        mov sp, x30             /* Set stack address */
        bl main                 /* Branch to main() */

        /* After main returns, enter WFI loop instead of shutting down */
        /* This allows all VCPUs to complete their work */
    sleep:
        wfi                     /* Wait for interrupt */
        b sleep                 /* Endless loop */

    .global system_off
    system_off:
        ldr x0, =0x84000008     /* SYSTEM_OFF function ID - shutdown entire VM */
        hvc #0                  /* Hypervisor call */
        b sleep                 /* Fallback to sleep if HVC returns */
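The per-CPU stack arithmetic in the startup code can be checked on the host with a few lines of C. This is only a sketch: `stack_top_for_cpu` is a hypothetical helper, and the base address and stack size are assumed from the comments in the assembly rather than read from the linker script.

```c
#include <stdint.h>

/* Assumed from the comments in the startup assembly. */
#define STACK_TOP_BASE 0x04020000ULL /* value of stack_top, used by CPU 0 */
#define STACK_SIZE     0x10000ULL    /* 64 KB per CPU */

/* Hypothetical helper mirroring `and x0, x0, #0xFF` followed by `madd`. */
static uint64_t stack_top_for_cpu(uint64_t mpidr)
{
    uint64_t cpu_id = mpidr & 0xFF;              /* Aff0 field */
    return STACK_TOP_BASE + cpu_id * STACK_SIZE; /* stack_top + id * 64 KB */
}
```

With the MPIDR values used later in this demo, `stack_top_for_cpu(0x80000000)` gives 0x04020000 and `stack_top_for_cpu(0x80000001)` gives 0x04030000.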

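The system_off routine is never called here: if one VCPU called it, the whole system would shut down, cutting off the other VCPU while it is still running. So the code does bl main and, once printing is done, drops into the wfi loop.

hello_world.c prints C0! or C1! to a different UART depending on the CPU; the strings are kept short so the output stays brief: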
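The constant 0x84000008 loaded by system_off can be taken apart to see why it is a whole-system call. The sketch below assumes the SMC Calling Convention field layout (bit 31 fast call, bit 30 64-bit convention, bits 29:24 owning entity, bits 15:0 function number); `smccc_decode` and its struct are hypothetical names used only for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical container for the decoded fields of a function ID. */
struct smccc_id {
    bool     fast_call; /* bit 31 */
    bool     is_64bit;  /* bit 30 */
    unsigned owner;     /* bits 29:24, 4 = standard secure service */
    unsigned function;  /* bits 15:0 */
};

/* Split a 32-bit function ID into its fields (assumed SMCCC layout). */
static struct smccc_id smccc_decode(uint32_t id)
{
    struct smccc_id d;
    d.fast_call = (id >> 31) & 1u;
    d.is_64bit  = (id >> 30) & 1u;
    d.owner     = (id >> 24) & 0x3Fu;
    d.function  = id & 0xFFFFu;
    return d;
}
```

Decoding 0x84000008 yields a fast call in the 32-bit convention, owner 4, function 8, which is the PSCI SYSTEM_OFF request that the comment in the assembly refers to.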

    volatile unsigned int * const UART0DR = (unsigned int *) 0x10000000;
    volatile unsigned int * const UART1DR = (unsigned int *) 0x10008000;

    void print_uart0(const char *s)
    {
        while (*s != '\0') {               /* Loop until end of string */
            *UART0DR = (unsigned int)(*s); /* Transmit char */
            s++;                           /* Next char */
        }
    }

    void print_uart1(const char *s)
    {
        while (*s != '\0') {               /* Loop until end of string */
            *UART1DR = (unsigned int)(*s); /* Transmit char */
            s++;                           /* Next char */
        }
    }

    // Read MPIDR_EL1 to get CPU ID
    unsigned long get_cpu_id()
    {
        unsigned long mpidr;
        asm volatile("mrs %0, mpidr_el1" : "=r" (mpidr));
        return mpidr & 0xFF; // Extract Aff0 field (CPU ID)
    }

    void main()
    {
        unsigned long cpu_id = get_cpu_id();

        if (cpu_id == 0) {
            print_uart0("C0!\n");
        } else if (cpu_id == 1) {
            print_uart1("C1!\n");
        } else {
            print_uart0("C?\n");
        }
    }

In kvm_test.c, main creates two VCPUs. The memory mapping is set up only once for both, because it is a VM-level resource. For each VCPU, the code then tries to set the CPU ID register MPIDR_EL1 and sets the PC register. In the run shown at the end, the MPIDR_EL1 write fails with a warning, and the guest still sees distinct CPU IDs because KVM assigns them itself:
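print_uart0 and print_uart1 differ only in the register they write to, so one possible variation is a single function that takes the data register as a parameter. This is a sketch of that alternative, not the code the demo uses; `uart_puts` is a hypothetical name.

```c
/* Write every character of s to the given UART data register.
 * The pointer is volatile so each store is actually emitted. */
static void uart_puts(volatile unsigned int *uart_dr, const char *s)
{
    while (*s != '\0') {
        *uart_dr = (unsigned int)(*s); /* one store per character */
        s++;
    }
}
```

In the guest this would be called as `uart_puts(UART0DR, "C0!\n")` or `uart_puts(UART1DR, "C1!\n")`. On the host, pointing it at an ordinary variable is enough to exercise it, since the variable then holds the last character written.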

    int main()
    {
        int ret;
        uint64_t *mem;
        size_t mmap_size;

        /* Get the KVM file descriptor */
        kvm = open("/dev/kvm", O_RDWR | O_CLOEXEC);
        if (kvm < 0) {
            printf("Cannot open '/dev/kvm': %s", strerror(errno));
            return kvm;
        }

        /* Make sure we have the stable version of the API */
        ret = ioctl(kvm, KVM_GET_API_VERSION, NULL);
        if (ret < 0) {
            printf("System call 'KVM_GET_API_VERSION' failed: %s", strerror(errno));
            return ret;
        }
        if (ret != 12) {
            printf("expected KVM API Version 12 got: %d", ret);
            return -1;
        }

        /* Create a VM and receive the VM file descriptor */
        printf("Creating VM\n");
        vmfd = ioctl_exit_on_error(kvm, KVM_CREATE_VM, "KVM_CREATE_VM", (unsigned long) 0);

        printf("Setting up memory\n");
        /*
         * MEMORY MAP
         * One memory block of 0x1000 B will be assigned to every part of the memory:
         *
         * Start      | Name  | Description
         * -----------+-------+------------
         * 0x00000000 | ROM   |
         * 0x04000000 | RAM   |
         * 0x04010000 | Heap  | increases
         * 0x0401F000 | Stack | decreases, so the stack pointer is initially 0x04020000
         * 0x10000000 | MMIO  | UART0
         * 0x10008000 | MMIO  | UART1
         */
        check_vm_extension(KVM_CAP_USER_MEMORY, "KVM_CAP_USER_MEMORY");

        /* ROM Memory */
        memory_mappings[0].guest_phys_addr = 0x0;
        memory_mappings[0].memory_size = MEMORY_BLOCK_SIZE;
        mem = allocate_memory_to_vm(memory_mappings[0].memory_size, memory_mappings[0].guest_phys_addr);
        memory_mappings[0].userspace_addr = mem;

        /* RAM Memory */
        memory_mappings[1].guest_phys_addr = 0x04000000;
        memory_mappings[1].memory_size = MEMORY_BLOCK_SIZE;
        mem = allocate_memory_to_vm(memory_mappings[1].memory_size, memory_mappings[1].guest_phys_addr);
        memory_mappings[1].userspace_addr = mem;

        ret = copy_elf_into_memory();
        if (ret < 0)
            return ret;

        /* Heap Memory */
        mem = allocate_memory_to_vm(MEMORY_BLOCK_SIZE * 2, 0x04010000);

        /* Stack Memory - allocate 64KB (0x10000) per CPU */
        /* CPU 0 stack: 0x04020000, CPU 1 stack: 0x04030000 */
        mem = allocate_memory_to_vm(MEMORY_BLOCK_SIZE * NUM_VCPUS, 0x04020000);

        /* MMIO Memory - UART0 */
        /* Read-only memory makes a write to 0x10000000 result in a KVM_EXIT_MMIO. */
        check_vm_extension(KVM_CAP_READONLY_MEM, "KVM_CAP_READONLY_MEM");
        mem = allocate_memory_to_vm(MEMORY_BLOCK_SIZE, 0x10000000, KVM_MEM_READONLY);

        /* MMIO Memory - UART1 */
        mem = allocate_memory_to_vm(MEMORY_BLOCK_SIZE, 0x10008000, KVM_MEM_READONLY);

        /* Get CPU information for VCPU init */
        printf("Retrieving physical CPU information\n");
        struct kvm_vcpu_init preferred_target;
        ioctl_exit_on_error(vmfd, KVM_ARM_PREFERRED_TARGET, "KVM_ARM_PREFERRED_TARGET", &preferred_target);

        /* Enable the PSCI v0.2 CPU feature, to be able to shut down the VM */
        check_vm_extension(KVM_CAP_ARM_PSCI_0_2, "KVM_CAP_ARM_PSCI_0_2");
        preferred_target.features[0] |= 1 << KVM_ARM_VCPU_PSCI_0_2;

        /* Get VCPU mmap size */
        ret = ioctl_exit_on_error(kvm, KVM_GET_VCPU_MMAP_SIZE, "KVM_GET_VCPU_MMAP_SIZE", NULL);
        mmap_size = ret;
        if (mmap_size < sizeof(struct kvm_run))
            printf("KVM_GET_VCPU_MMAP_SIZE unexpectedly small");

        uint64_t entry_addr = get_entry_address();
        printf("Entry address: 0x%08lX\n", entry_addr);

        /* Create and initialize VCPUs */
        for (int i = 0; i < NUM_VCPUS; i++) {
            printf("\nCreating VCPU %d\n", i);
            vcpus[i].vcpu_id = i;
            vcpus[i].mmio_buffer_index = 0;
            memset(vcpus[i].mmio_buffer, 0, MAX_VM_RUNS);

            /* Create a virtual CPU and receive its file descriptor */
            vcpus[i].vcpufd = ioctl_exit_on_error(vmfd, KVM_CREATE_VCPU, "KVM_CREATE_VCPU", (unsigned long) i);

            /* Map the shared kvm_run structure and following data. */
            void *void_mem = mmap(NULL, mmap_size, PROT_READ | PROT_WRITE, MAP_SHARED, vcpus[i].vcpufd, 0);
            if (void_mem == MAP_FAILED) { /* mmap reports failure as MAP_FAILED, not NULL */
                printf("Error while mmap vcpu %d\n", i);
                return -1;
            }
            vcpus[i].run = static_cast<kvm_run *>(void_mem);

            /* Initialize VCPU */
            printf("Initializing VCPU %d\n", i);
            ioctl_exit_on_error(vcpus[i].vcpufd, KVM_ARM_VCPU_INIT, "KVM_ARM_VCPU_INIT", &preferred_target);

            /* Try to set MPIDR_EL1 register to identify CPU ID */
            check_vm_extension(KVM_CAP_ONE_REG, "KVM_CAP_ONE_REG");
            uint64_t mpidr_value = 0x80000000 | i; // Set CPU ID in Aff0 field
            /* Note: this id uses the core-register class (index 5), not the
             * system-register encoding of MPIDR_EL1, so the write below fails
             * in the run shown later and only the warning is printed. */
            uint64_t mpidr_id = KVM_REG_ARM64 | KVM_REG_SIZE_U64 | KVM_REG_ARM_CORE |
                                (2 * (offsetof(struct kvm_regs, regs) / sizeof(__u32)) + 5);
            struct kvm_one_reg mpidr_reg = {.id = mpidr_id, .addr = (uint64_t)&mpidr_value};
            printf("Setting MPIDR_EL1 for VCPU %d to 0x%08lX\n", i, mpidr_value);
            ret = ioctl(vcpus[i].vcpufd, KVM_SET_ONE_REG, &mpidr_reg);
            if (ret < 0) {
                printf("Warning: Could not set MPIDR_EL1 for VCPU %d (this is normal, KVM sets it automatically)\n", i);
            }

            /* Set program counter to entry address */
            uint64_t pc_index = offsetof(struct kvm_regs, regs.pc) / sizeof(__u32);
            uint64_t pc_id = KVM_REG_ARM64 | KVM_REG_SIZE_U64 | KVM_REG_ARM_CORE | pc_index;
            printf("Setting program counter for VCPU %d to entry address 0x%08lX\n", i, entry_addr);
            struct kvm_one_reg pc = {.id = pc_id, .addr = (uint64_t)&entry_addr};
            ret = ioctl_exit_on_error(vcpus[i].vcpufd, KVM_SET_ONE_REG, "KVM_SET_ONE_REG", &pc);
            if (ret < 0)
                return ret;
        }

        /* Create threads for each VCPU */
        pthread_t threads[NUM_VCPUS];
        printf("\nStarting VCPU threads\n");
        for (int i = 0; i < NUM_VCPUS; i++) {
            ret = pthread_create(&threads[i], NULL, vcpu_thread, &vcpus[i]);
            if (ret != 0) {
                printf("Failed to create thread for VCPU %d: %s\n", i, strerror(ret));
                return -1;
            }
        }

        /* Wait for all VCPU threads to complete */
        printf("Waiting for VCPU threads to complete\n");
        for (int i = 0; i < NUM_VCPUS; i++) {
            ret = pthread_join(threads[i], NULL);
            if (ret != 0) {
                printf("Failed to join thread for VCPU %d: %s\n", i, strerror(ret));
            }
        }

        printf("\nAll VCPUs completed\n");

        /* Clean up VCPUs */
        for (int i = 0; i < NUM_VCPUS; i++) {
            close_fd(vcpus[i].vcpufd);
        }
        close_fd(vmfd);
        close_fd(kvm);
        return 0;
    }
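The register ids passed to KVM_SET_ONE_REG are plain 64-bit values, so their composition can be worked out without a KVM host. The sketch below copies the relevant constants under `DEMO_` names so that it builds without kernel headers; they are assumed to match the arm64 KVM UAPI headers, and `core_reg_id` and `sys_reg_id` are hypothetical helpers. It contrasts the core-register class used for the PC with the system-register class, which is how MPIDR_EL1 (op0=3, op1=0, CRn=0, CRm=0, op2=5) would normally be addressed. Whether KVM accepts a write to that register is not verified here.

```c
#include <stdint.h>

/* Assumed to mirror <linux/kvm.h> and the arm64 <asm/kvm.h>. */
#define DEMO_KVM_REG_ARM64        0x6000000000000000ULL
#define DEMO_KVM_REG_SIZE_U64     0x0030000000000000ULL
#define DEMO_KVM_REG_ARM_CORE     (0x0010ULL << 16)
#define DEMO_KVM_REG_ARM64_SYSREG (0x0013ULL << 16)

/* Core registers are indexed by their byte offset in struct kvm_regs,
 * counted in 32-bit units. */
static uint64_t core_reg_id(uint64_t byte_offset)
{
    return DEMO_KVM_REG_ARM64 | DEMO_KVM_REG_SIZE_U64 |
           DEMO_KVM_REG_ARM_CORE | (byte_offset / 4);
}

/* System registers are identified by their op0/op1/CRn/CRm/op2 encoding. */
static uint64_t sys_reg_id(unsigned op0, unsigned op1, unsigned crn,
                           unsigned crm, unsigned op2)
{
    return DEMO_KVM_REG_ARM64 | DEMO_KVM_REG_SIZE_U64 |
           DEMO_KVM_REG_ARM64_SYSREG |
           ((uint64_t)op0 << 14) | ((uint64_t)op1 << 11) |
           ((uint64_t)crn << 7) | ((uint64_t)crm << 3) | (uint64_t)op2;
}
```

Assuming the PC sits after x0..x30 and sp in the register block (byte offset 256), `core_reg_id(256)` evaluates to 0x6030000000100040, and `sys_reg_id(3, 0, 0, 0, 5)` evaluates to 0x603000000013C005.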

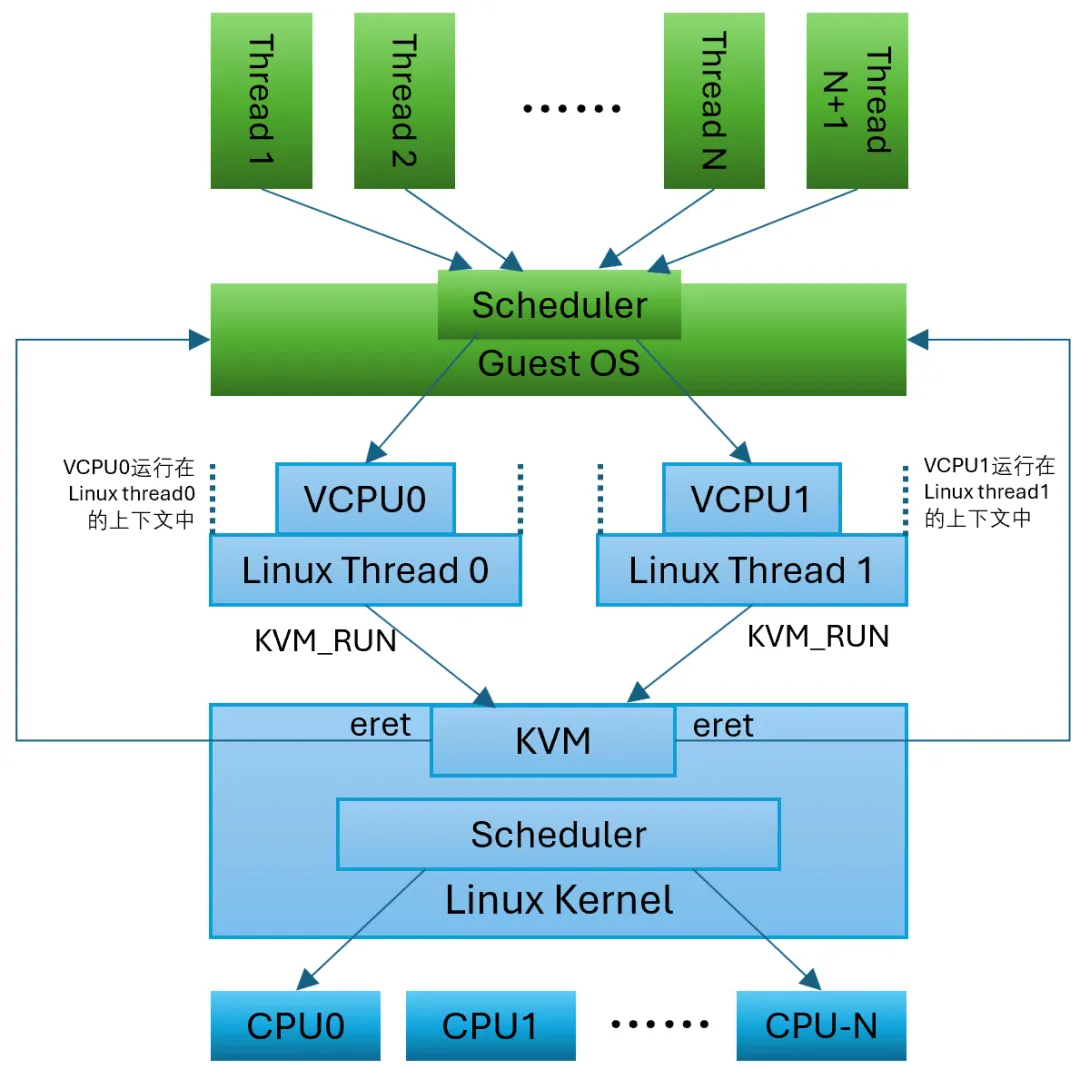

Two pthread threads are then created. Each serves as the context of one VCPU, and that VCPU only ever executes inside its thread:

    /**
     * VCPU thread function - each VCPU runs in its own thread
     */
    void *vcpu_thread(void *arg)
    {
        struct vcpu_data *vcpu = (struct vcpu_data *)arg;
        int ret;

        printf("[VCPU %d] Thread started\n", vcpu->vcpu_id);

        /* Repeatedly run code and handle VM exits. */
        bool shut_down = false;
        for (int i = 0; i < MAX_VM_RUNS && !shut_down; i++) {
            ret = ioctl(vcpu->vcpufd, KVM_RUN, NULL);
            if (ret < 0) {
                printf("[VCPU %d] System call 'KVM_RUN' failed: %d - %s\n", vcpu->vcpu_id, errno, strerror(errno));
                printf("[VCPU %d] Error Numbers: EINTR=%d; ENOEXEC=%d; ENOSYS=%d; EPERM=%d\n", vcpu->vcpu_id, EINTR, ENOEXEC, ENOSYS, EPERM);
                return NULL;
            }

            pthread_mutex_lock(&print_mutex);
            printf("\n[VCPU %d] KVM_RUN Loop %d:\n", vcpu->vcpu_id, i+1);
            switch (vcpu->run->exit_reason) {
            case KVM_EXIT_MMIO:
                printf("[VCPU %d] Exit Reason: KVM_EXIT_MMIO\n", vcpu->vcpu_id);
                if (mmio_exit_handler(vcpu)) {
                    printf("[VCPU %d] Output complete, exiting loop\n", vcpu->vcpu_id);
                    shut_down = true;
                }
                break;
            case KVM_EXIT_SYSTEM_EVENT:
                // This happens when the VCPU has done a HVC based PSCI call.
                printf("[VCPU %d] Exit Reason: KVM_EXIT_SYSTEM_EVENT\n", vcpu->vcpu_id);
                print_system_event_exit_reason(vcpu);
                shut_down = true;
                break;
            case KVM_EXIT_INTR:
                printf("[VCPU %d] Exit Reason: KVM_EXIT_INTR\n", vcpu->vcpu_id);
                i--; // Don't count this iteration
                break;
            case KVM_EXIT_FAIL_ENTRY:
                printf("[VCPU %d] Exit Reason: KVM_EXIT_FAIL_ENTRY\n", vcpu->vcpu_id);
                break;
            case KVM_EXIT_INTERNAL_ERROR:
                printf("[VCPU %d] Exit Reason: KVM_EXIT_INTERNAL_ERROR\n", vcpu->vcpu_id);
                break;
            default:
                printf("[VCPU %d] Exit Reason: other\n", vcpu->vcpu_id);
            }
            pthread_mutex_unlock(&print_mutex);
        }

        pthread_mutex_lock(&print_mutex);
        printf("\n[VCPU %d] VM MMIO Output:\n", vcpu->vcpu_id);
        for (int i = 0; i < vcpu->mmio_buffer_index; i++) {
            printf("%c", vcpu->mmio_buffer[i]);
        }
        printf("\n");
        printf("[VCPU %d] Thread finished\n", vcpu->vcpu_id);
        pthread_mutex_unlock(&print_mutex);

        return NULL;
    }

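Each thread simply calls KVM_RUN in a loop. When KVM_RUN enters KVM in kernel mode, KVM switches to the VCPU's program with eret, still within the pthread's context. When the program on the VCPU writes to the UART, the write raises an exception that returns control to KVM, and from there to the user-space part of the pthread that called KVM_RUN, reporting an MMIO operation.

The MMIO handler, mmio_exit_handler, has one special case: when it sees \n being printed, the program on that VCPU has finished its output, so the thread stops scheduling the VCPU through KVM_RUN. Since system_off is not called, the KVM_EXIT_SYSTEM_EVENT case is never reached: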
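The run-and-exit cycle can be modelled on the host without KVM to show that every guest store to the UART costs one round trip through the loop. Everything in this sketch is a stand-in: `mock_run` plays the role of the KVM_RUN ioctl, the `MOCK_EXIT_*` values are invented for the model, and the pretend guest is just a string. Unlike the real demo, the model stops when the pretend guest has nothing left to write.

```c
#include <stdbool.h>
#include <stddef.h>

enum mock_exit { MOCK_EXIT_MMIO, MOCK_EXIT_DONE }; /* invented exit reasons */

struct mock_vcpu {
    const char    *guest_output; /* what the pretend guest will write */
    size_t         pos;          /* next character the guest emits */
    enum mock_exit exit_reason;  /* filled in by mock_run */
    char           mmio_byte;    /* payload of the last MMIO exit */
    char           captured[16]; /* host-side copy of the output */
    size_t         captured_len;
};

/* Stand-in for ioctl(KVM_RUN): run the guest until its next exit. */
static void mock_run(struct mock_vcpu *v)
{
    if (v->guest_output[v->pos] != '\0') {
        v->exit_reason = MOCK_EXIT_MMIO;
        v->mmio_byte = v->guest_output[v->pos++];
    } else {
        v->exit_reason = MOCK_EXIT_DONE;
    }
}

/* Re-enter the guest until it is done; returns the number of entries. */
static int mock_vcpu_loop(struct mock_vcpu *v, int max_runs)
{
    int runs = 0;
    bool done = false;
    while (runs < max_runs && !done) {
        mock_run(v);
        runs++;
        switch (v->exit_reason) {
        case MOCK_EXIT_MMIO: /* record the byte, then re-enter the guest */
            if (v->captured_len < sizeof(v->captured) - 1)
                v->captured[v->captured_len++] = v->mmio_byte;
            break;
        case MOCK_EXIT_DONE:
            done = true;
            break;
        }
    }
    v->captured[v->captured_len] = '\0';
    return runs;
}
```

For a pretend guest that writes "C0!\n", the loop enters the guest five times: four MMIO exits, one per character, plus the final exit that ends the loop.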

    /**
     * Handles a MMIO exit from KVM_RUN.
     * Returns true if the output is complete (ends with newline).
     */
    bool mmio_exit_handler(struct vcpu_data *vcpu)
    {
        printf("[VCPU %d] Is Write: %d\n", vcpu->vcpu_id, vcpu->run->mmio.is_write);

        if (vcpu->run->mmio.is_write) {
            printf("[VCPU %d] Length: %d\n", vcpu->vcpu_id, vcpu->run->mmio.len);
            uint64_t data = 0;
            for (int j = 0; j < vcpu->run->mmio.len; j++) {
                /* Widen to 64 bits before shifting so bytes 4..7 are not lost */
                data |= (uint64_t)vcpu->run->mmio.data[j] << (8 * j);
            }

            vcpu->mmio_buffer[vcpu->mmio_buffer_index] = data;
            vcpu->mmio_buffer_index++;
            printf("[VCPU %d] Guest wrote 0x%08lX to 0x%08llX\n", vcpu->vcpu_id, data, vcpu->run->mmio.phys_addr);

            // Check if output is complete (ends with newline)
            if (data == '\n') {
                return true;
            }
        }
        return false;
    }
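The byte-assembly step of the handler can be isolated and tested on its own. In this sketch, `mmio_bytes_to_u64` and `is_end_of_output` are hypothetical helpers; the bytes are assumed to arrive least significant first, as the handler's loop implies. Each byte is widened to 64 bits before the shift, which keeps the result well defined for accesses longer than 4 bytes.

```c
#include <stdbool.h>
#include <stdint.h>

/* Combine up to 8 MMIO data bytes, least significant byte first. */
static uint64_t mmio_bytes_to_u64(const uint8_t *data, uint32_t len)
{
    uint64_t value = 0;
    for (uint32_t j = 0; j < len && j < 8; j++)
        value |= (uint64_t)data[j] << (8 * j);
    return value;
}

/* The demo treats a newline as the end of a VCPU's output. */
static bool is_end_of_output(uint64_t value)
{
    return value == (uint64_t)'\n';
}
```

A 4-byte write of the character 'C' arrives as the bytes 0x43, 0x00, 0x00, 0x00 and assembles to 0x43, matching the "Guest wrote 0x00000043" lines in the output.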

The run produces the following output:

    # ./kvm_test
    Creating VM
    Setting up memory
    Opening ELF file
    It contains 3 sections
    Section 0 needs to be loaded
    Section loaded. Host address: 0xffffa9e5e000 - Guest address: 0x00000000
    Section 1 needs to be loaded
    Section loaded. Host address: 0xffffa9e56000 - Guest address: 0x04000000
    Section 2 does not need to be loaded
    Closing ELF file
    Retrieving physical CPU information
    Entry address: 0x00000000
    Creating VCPU 0
    Initializing VCPU 0
    Setting MPIDR_EL1 for VCPU 0 to 0x80000000
    Warning: Could not set MPIDR_EL1 for VCPU 0 (this is normal, KVM sets it automatically)
    Setting program counter for VCPU 0 to entry address 0x00000000
    Creating VCPU 1
    Initializing VCPU 1
    Setting MPIDR_EL1 for VCPU 1 to 0x80000001
    Warning: Could not set MPIDR_EL1 for VCPU 1 (this is normal, KVM sets it automatically)
    Setting program counter for VCPU 1 to entry address 0x00000000
    Starting VCPU threads
    [VCPU 0] Thread started
    Waiting for VCPU threads to complete
    [VCPU 1] Thread started
    [VCPU 0] KVM_RUN Loop 1:
    [VCPU 0] Exit Reason: KVM_EXIT_MMIO
    [VCPU 0] Is Write: 1
    [VCPU 0] Length: 4
    [VCPU 0] Guest wrote 0x00000043 to 0x10000000
    [VCPU 0] KVM_RUN Loop 2:
    [VCPU 0] Exit Reason: KVM_EXIT_MMIO
    [VCPU 0] Is Write: 1
    [VCPU 0] Length: 4
    [VCPU 0] Guest wrote 0x00000030 to 0x10000000
    [VCPU 1] KVM_RUN Loop 1:
    [VCPU 1] Exit Reason: KVM_EXIT_MMIO
    [VCPU 1] Is Write: 1
    [VCPU 1] Length: 4
    [VCPU 1] Guest wrote 0x00000043 to 0x10008000
    [VCPU 0] KVM_RUN Loop 3:
    [VCPU 0] Exit Reason: KVM_EXIT_MMIO
    [VCPU 0] Is Write: 1
    [VCPU 0] Length: 4
    [VCPU 0] Guest wrote 0x00000021 to 0x10000000
    [VCPU 1] KVM_RUN Loop 2:
    [VCPU 1] Exit Reason: KVM_EXIT_MMIO
    [VCPU 1] Is Write: 1
    [VCPU 1] Length: 4
    [VCPU 1] Guest wrote 0x00000031 to 0x10008000
    [VCPU 0] KVM_RUN Loop 4:
    [VCPU 0] Exit Reason: KVM_EXIT_MMIO
    [VCPU 0] Is Write: 1
    [VCPU 0] Length: 4
    [VCPU 0] Guest wrote 0x0000000A to 0x10000000
    [VCPU 0] Output complete, exiting loop
    [VCPU 0] VM MMIO Output:
    C0!
    [VCPU 0] Thread finished
    [VCPU 1] KVM_RUN Loop 3:
    [VCPU 1] Exit Reason: KVM_EXIT_MMIO
    [VCPU 1] Is Write: 1
    [VCPU 1] Length: 4
    [VCPU 1] Guest wrote 0x00000021 to 0x10008000
    [VCPU 1] KVM_RUN Loop 4:
    [VCPU 1] Exit Reason: KVM_EXIT_MMIO
    [VCPU 1] Is Write: 1
    [VCPU 1] Length: 4
    [VCPU 1] Guest wrote 0x0000000A to 0x10008000
    [VCPU 1] Output complete, exiting loop
    [VCPU 1] VM MMIO Output:
    C1!
    [VCPU 1] Thread finished
    All VCPUs completed